The darker shading of color depicts clustering of MPI ranks around the value of the y-axis for every x. The x-axis is the time at which samples were taken by the profiler, whereas y-axis is the time reading from ranks at a particular time stamp. Allinea Map profile of SAGECal-MPI run with four MPI tasks on four nodes with four pthreads per MPI task on each node sharing 64 GB per node. The thread body is specified via a Runnable object passed to the constructor, and the range endpoints must be stored as attributes of the Runnable object.įig. In Java, threads are represented with Thread objects. A pointer to this struct is then passed to the pthread_create function, which creates a thread.

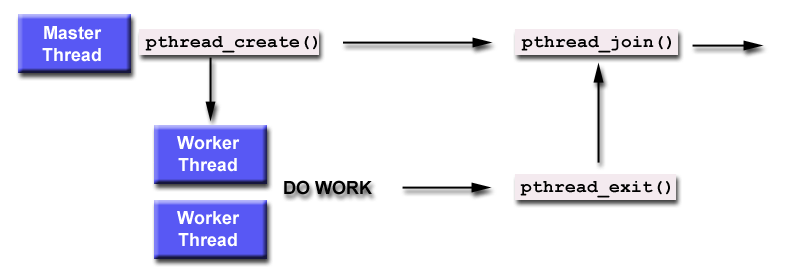

In C, thread functions take only a single argument, so the endpoints must be placed in a struct. These arguments, along with the thread function, are passed to the constructor for std::thread. This is simplest in C++, 2 where the endpoints can be passed as a pair of integer arguments. To do this, the thread function needs to know the endpoints of the range of candidate numbers to check. Each thread will run this function for a different range of candidate numbers. The main part of this is to move the nextCand loop shown in Figure 4.1 into a function for a thread to run. The first step is creating multiple threads. Bunde, in Topics in Parallel and Distributed Computing, 2015 Creating multiple threads However, the ParaC differs from other languages primarily in its conforming to international C standards, fully language based supporting, and supporting scalable parallel programming ranging from fine grain to coarse grain parallelism effectively.ĭavid P.

Pthread c shared memory Pc#

The SPAX operating system, MISIX(MIcrokernel-based Single system image unIX), is based on the combination of Chorus microkernel and UnixWare 2.0.Įxamples of language designed by the similar concept are Cid designed by DEC, pC designed by IBM, Par.C designed by Parsec, and CC++ designed by California Institute of Technology. Our prototype has been tested on the SPAX (Scalable Parallel Architecture based on X-bar network) machine, a highly scalable parallel system. The translated codes are compiled to executable files by a C compiler, and executable files are copied into nodes and are executed as tasks. The ParaC translation system was implemented by C, and lex, yacc is used in part. The ParaC task library is implemented by Chorus IPC (InterProcess Communication), and manages the execution of the tasks and data exchange between tasks. So, each file transforms into C codes using the ParaC task library primitive. Third, a program which is coded by the task constructs is composed of multiple files. A variable declared by the single construct is classified into read and write mode according to its usage at compile time, and is transformed into a ParaC library function for thread synchronization. Second, atomic and lock constructs are directly translated into Pthreads primitives. To support nested parallel programming and recursion, the translator manages each statement independently. Since the primitive allows one argument for parameter passing, local variables used in the statement are structured into one variable and linked to its primitive. First, each statement defined by the parallel constructs transforms into a function definition and the function is called by a Pthreads primitive, pthread_create.
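The first ParaC translation step, outlining a parallel statement into a function and packing its locals into one struct for pthread_create, can be illustrated by hand. The C sketch below is a hypothetical illustration, not output of the ParaC translator. The two statements (a minimum and a maximum over an array) and the names `env_s1`, `env_s2`, `stmt_s1`, `stmt_s2` and `min_max` are invented for the example.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical illustration, NOT actual ParaC output. Suppose a parallel
   construct contains two statements that use the locals v, n, lo and hi:
       S1: lo = minimum of v[0..n-1]
       S2: hi = maximum of v[0..n-1]
   Each statement becomes its own thread function. Because pthread_create
   passes a single pointer, the locals a statement uses go into one struct. */

struct env_s1 { const int *v; int n; int *lo; };
struct env_s2 { const int *v; int n; int *hi; };

static void *stmt_s1(void *arg) {            /* body of S1 */
    struct env_s1 *e = arg;
    int m = e->v[0];
    for (int i = 1; i < e->n; i++)
        if (e->v[i] < m) m = e->v[i];
    *e->lo = m;                              /* write back to the caller's local */
    return NULL;
}

static void *stmt_s2(void *arg) {            /* body of S2 */
    struct env_s2 *e = arg;
    int m = e->v[0];
    for (int i = 1; i < e->n; i++)
        if (e->v[i] > m) m = e->v[i];
    *e->hi = m;                              /* write back to the caller's local */
    return NULL;
}

/* The enclosing function after (hypothetical) translation: one
   pthread_create per statement, then joins before the locals are read.
   Returns 0 on success, -1 if a thread could not be created. */
int min_max(const int *v, int n, int *out_lo, int *out_hi) {
    assert(n >= 1);
    int lo, hi;                              /* locals used by S1 and S2 */
    struct env_s1 e1 = { v, n, &lo };
    struct env_s2 e2 = { v, n, &hi };
    pthread_t t1, t2;
    if (pthread_create(&t1, NULL, stmt_s1, &e1) != 0)
        return -1;
    if (pthread_create(&t2, NULL, stmt_s2, &e2) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    *out_lo = lo;
    *out_hi = hi;
    return 0;
}
```

For example, with `int v[] = {5, -2, 9, 0};`, calling `min_max(v, 4, &lo, &hi)` returns 0 and sets `lo` to -2 and `hi` to 9. Each call builds its environment structs in its own stack frame, so nested or recursive calls do not share them. That is one plausible reading of why handling each statement independently helps with nesting and recursion.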
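The ParaC description says only that atomic and lock constructs are translated directly into Pthreads primitives. The sketch below assumes a mutex is the primitive used and lowers an atomic update of a shared counter to a lock/unlock pair. This is not ParaC's documented mapping. `counter`, `worker` and `run_atomic_demo` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical sketch, not ParaC's documented mapping: an update that the
   programmer marked atomic, e.g.
       counter += k;
   lowered to a Pthreads mutex lock/unlock pair around the statement. */

#define MAX_WORKERS 16
#define ITERATIONS 100000

static long counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *worker(void *arg) {
    long k = *(const long *)arg;
    for (int i = 0; i < ITERATIONS; i++) {
        pthread_mutex_lock(&counter_lock);    /* enter the atomic region */
        counter += k;
        pthread_mutex_unlock(&counter_lock);  /* leave the atomic region */
    }
    return NULL;
}

/* Starts nthreads workers that each add k to the shared counter ITERATIONS
   times; returns the final counter, or -1 if a thread could not be created. */
long run_atomic_demo(int nthreads, long k) {
    assert(nthreads > 0 && nthreads <= MAX_WORKERS);
    pthread_t t[MAX_WORKERS];
    int created = 0;
    counter = 0;   /* no workers are running yet, so no lock is needed */
    for (int i = 0; i < nthreads; i++) {
        if (pthread_create(&t[i], NULL, worker, &k) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(t[i], NULL);   /* join also makes the workers' writes visible */
    return created == nthreads ? counter : -1;
}
```

Without the lock, concurrent `counter += k` updates can be lost, and the final value would usually fall short of `nthreads * k * ITERATIONS`.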
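The ParaC text does not name the synchronization functions generated for the single construct. One plausible Pthreads analogue of classifying accesses into read and write mode is a POSIX read-write lock, where reads take a shared lock and writes take an exclusive one. The sketch below assumes that mapping. `struct shared_int`, the `shared_*` functions, `adder` and `rw_demo` are invented names, not ParaC library functions.

```c
#define _POSIX_C_SOURCE 200809L   /* expose pthread_rwlock_t under strict ISO C modes */
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical sketch: accesses to a shared variable that the compiler has
   classified as reads or writes are routed through a POSIX read-write lock.
   These names are invented; they are not the ParaC library functions. */

struct shared_int {
    int value;
    pthread_rwlock_t lock;
};

int shared_init(struct shared_int *s, int v) {
    s->value = v;
    return pthread_rwlock_init(&s->lock, NULL);
}

/* Read mode: many readers may hold the lock at the same time. */
int shared_read(struct shared_int *s) {
    pthread_rwlock_rdlock(&s->lock);
    int v = s->value;
    pthread_rwlock_unlock(&s->lock);
    return v;
}

/* Write mode: exclusive access, also required for read-modify-write. */
void shared_add(struct shared_int *s, int delta) {
    pthread_rwlock_wrlock(&s->lock);
    s->value += delta;
    pthread_rwlock_unlock(&s->lock);
}

void shared_destroy(struct shared_int *s) {
    pthread_rwlock_destroy(&s->lock);
}

static void *adder(void *arg) {
    struct shared_int *s = arg;
    for (int i = 0; i < 1000; i++) {
        shared_add(s, 1);          /* a write-mode access */
        (void)shared_read(s);      /* a read-mode access */
    }
    return NULL;
}

/* Runs nthreads threads (1 to 8) that each add 1 a thousand times;
   returns the final value, or -1 on failure. */
int rw_demo(int nthreads) {
    assert(nthreads >= 1 && nthreads <= 8);
    struct shared_int s;
    if (shared_init(&s, 0) != 0)
        return -1;
    pthread_t t[8];
    int created = 0;
    for (int i = 0; i < nthreads; i++) {
        if (pthread_create(&t[i], NULL, adder, &s) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(t[i], NULL);
    int result = shared_read(&s);
    shared_destroy(&s);
    return created == nthreads ? result : -1;
}
```

A read-write lock only pays off when reads dominate. For a variable that is mostly written, a plain mutex would be the simpler choice under the same assumption.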
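The excerpt on creating multiple threads describes the C approach only in words. The sketch below assumes the per-candidate test in the nextCand loop (Figure 4.1, not reproduced here) is a primality check. `is_prime`, `struct range`, `check_range` and `count_primes_threaded` are hypothetical names. Each thread gets its own struct holding its range endpoints, and a pointer to that struct is the single argument passed to `pthread_create`.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for the test in the nextCand loop:
   trial-division primality check. */
static bool is_prime(long n) {
    if (n < 2) return false;
    for (long d = 2; d * d <= n; d++)
        if (n % d == 0) return false;
    return true;
}

/* Thread functions take a single void* argument, so the range endpoints
   (plus, here, a slot for the result) are bundled into one struct. */
struct range {
    long start;   /* first candidate, inclusive */
    long end;     /* last candidate, exclusive */
    long count;   /* primes found in [start, end), written by the thread */
};

static void *check_range(void *arg) {
    struct range *r = arg;
    r->count = 0;
    for (long cand = r->start; cand < r->end; cand++)
        if (is_prime(cand)) r->count++;
    return NULL;
}

#define MAX_THREADS 64

/* Counts primes in [lo, hi) by giving each of nthreads threads its own
   contiguous range. Returns -1 if a thread could not be created. */
long count_primes_threaded(long lo, long hi, int nthreads) {
    assert(nthreads > 0 && nthreads <= MAX_THREADS && lo <= hi);
    pthread_t tid[MAX_THREADS];
    struct range ranges[MAX_THREADS];   /* one struct per thread, outlives the threads */
    long span = hi - lo;
    int created = 0;
    for (int i = 0; i < nthreads; i++) {
        ranges[i].start = lo + span * i / nthreads;
        ranges[i].end = lo + span * (i + 1) / nthreads;
        /* Pass a pointer to this thread's own struct to pthread_create. */
        if (pthread_create(&tid[i], NULL, check_range, &ranges[i]) != 0)
            break;
        created++;
    }
    long total = 0;
    for (int i = 0; i < created; i++) {
        pthread_join(tid[i], NULL);     /* wait, then read that thread's result */
        total += ranges[i].count;
    }
    return created == nthreads ? total : -1;
}
```

For instance, `count_primes_threaded(0, 100, 4)` splits [0, 100) into four ranges of 25 candidates each and returns 25. Each thread needs its own struct. If one struct were reused across loop iterations, a thread could read endpoints that the loop had already overwritten for the next thread.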
